This notebooks demonstrates the storaage and retrieval of complex datatypes (blobs) and file attachments in DataJoint.

A blob refers to an attribute in a table that can store complex data structures such as numeric arrays.

An attachment refers to an attribute that can store an entire file with its filename, etc.

Both blobs and attachments can be stored directly in the tables of the relational database or in configurable external “stores” such as network-attached storage servers or object storage systems such Amazon S3 and Minio.

Many of these features existing in prior releases of datajoint but have been substantially expanded in version 0.12.0.

%matplotlib inline

from IPython import display

from matplotlib import pyplot as plt

import os

import imageio

import requests

from ipywidgets import Image

import ipywidgets

import numpy as np

import datajoint as djdj.__version__'0.12.dev7'Configure stores¶

The following is a configuration defining two external stores. This should only be done once for all users and the configuration file must be saved and provided to all users.

The first store is named "shared" and is hosted on an S3 endpoint.

The second store is named "local" and it uses the local path ./dj-store.

Now these repositories can be used for blobs and attachments.

## Storage configuration

# set up stores

dj.config['stores'] = {

'shared': dict(

protocol='s3',

endpoint='localhost:9000',

access_key='datajoint',

secret_key='datajoint',

bucket='datajoint-demo',

location=''

),

'local': { # store in files

'protocol': 'file',

'location': os.path.abspath('./dj-store')

}}# create a schema for this demo

schema = dj.schema('test_attach')Connecting dimitri@localhost:3306

schema.drop() # drop if exists to create anewProceed to delete entire schema `test_attach`? [yes, No]: yes

# create a schema for this demo

schema = dj.schema('test_attach')A Minimal example of blobs and configurable blobs¶

Let’s declear the table Test with blobs and attachments stored intrnally and externally.

@schema

class Test(dj.Manual):

definition = """

# Test blob and attachments

id : int

---

b0 : longblob # a python object stored internally in the table

b1 : blob@shared # a python object stored on S3

b2 : blob@local # a python object store on the file system

a0 : attach # a file attachment stored internally in the table

a1 : attach@shared # a file attachment stored on s3

a2 : attach@local # a file attachment stored on the file system

"""# Create three numpy arrays as save them in different files

q0, q1, q2 = np.random.randn(3,4), np.random.randn(7), np.random.randn(2, 3, 4)

f0, f1, f2 = './outfile0.npy', './outfile1.npy', './outfile2.npy'

np.save(f0, q0)

np.save(f1, q1)

np.save(f2, q2)Test.delete()About to delete:

Nothing to delete

# insert the blobs and the attachments into the table

Test.insert1(dict(id=1, b0=q0, b1=q1, b2=q2, a0=f0, a1=f1, a2=f2))Test()# delete the attached files

os.remove(f0)

os.remove(f1)

os.remove(f2)# now fetch them and verify that they retrieved correctly

result = Test.fetch(as_dict=True)result[0]['b0']array([[-0.03270063, 1.48132664, -0.47460454, 0.53584166],

[-0.54839705, 0.06459001, -0.12448809, 0.1326574 ],

[ 0.68458856, 0.36013697, 0.00544484, 0.30176943]])q0array([[-0.03270063, 1.48132664, -0.47460454, 0.53584166],

[-0.54839705, 0.06459001, -0.12448809, 0.1326574 ],

[ 0.68458856, 0.36013697, 0.00544484, 0.30176943]])np.array_equal(q0, result[0]['b0'])Trueresult[0]['a1']PosixPath('outfile1.npy')np.load(result[0]['a1'])array([ 1.24891915, -1.16716244, -0.55109167, -0.45738901, -0.68241222,

-0.23278837, 1.23499246])q1array([ 1.24891915, -1.16716244, -0.55109167, -0.45738901, -0.68241222,

-0.23278837, 1.23499246])schema.externalExternal file tables for schema `test_attach`:

"shared" s3:"

"local" file:/home/dimitri/dev/db-programming-with-datajoint/notebooks/dj-store"schema.external['local']schema.external['shared']schema.external['local'].fetch_external_paths()[(UUID('1faa3094-d718-075c-9c94-f1a6c0505c55'),

PurePosixPath('/home/dimitri/dev/db-programming-with-datajoint/notebooks/dj-store/test_attach/1f/aa/1faa3094d718075c9c94f1a6c0505c55.outfile2.npy')),

(UUID('784db39e-7622-9539-a896-d3afb854250c'),

PurePosixPath('/home/dimitri/dev/db-programming-with-datajoint/notebooks/dj-store/test_attach/78/4d/784db39e76229539a896d3afb854250c'))]schema.external['shared'].fetch_external_paths()[(UUID('013ac743-ddf5-afc8-bf7d-40fcf3754758'),

PurePosixPath('test_attach/01/3a/013ac743ddf5afc8bf7d40fcf3754758.outfile1.npy')),

(UUID('90dccd2e-fb2a-02c5-8556-f494b7a4ac57'),

PurePosixPath('test_attach/90/dc/90dccd2efb2a02c58556f494b7a4ac57'))]schema.external['shared'].used()schema.external['local'].used()schema.external['shared'].unused()Test.delete()About to delete:

`test_attach`.`test`: 1 items

Proceed? [yes, No]: yes

Committed.

schema.external['shared']schema.external['shared'].delete() # deleted100%|██████████| 2/2 [00:00<00:00, 74.11it/s]

[]# cleanup

for s in schema.external.values():

s.delete()0it [00:00, ?it/s]

100%|██████████| 2/2 [00:00<00:00, 70.79it/s]

Lookup of images on the web¶

We create a lookup table, WebImage to point to some images available on the web

@schema

class WebImage(dj.Lookup):

definition = """

# A reference to a web image

image_number : int

---

image_name : varchar(30)

image_description : varchar(1024)

image_url : varchar(1024)

unique index(image_name)

"""

contents = [

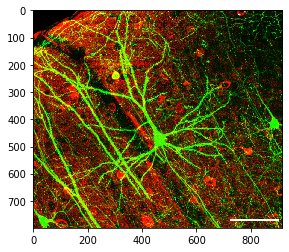

(0, "pyramidal",

'Coronal section containing the chronically imaged pyramidal '

'neuron "dow" (visualized by green GFP) does not stain for GABA '

'(visualized by antibody staining in red). '

'Confocal image stack, overlay of GFP and GABA channels. '

'Scale bar: 100 um',

"https://upload.wikimedia.org/wikipedia/commons/d/dc/"

"PLoSBiol4.e126.Fig6fNeuron.jpg"

),

(1, "striatal",

"Mouse spiny striatal projection neuron expressing a transgenic "

"fluorescent protein (colored yellow) delivered by a recombinant "

"virus (AAV). The striatal interneuron are stained in green for "

"the neurokinin-1 receptor.",

"https://upload.wikimedia.org/wikipedia/commons/e/e8/"

"Striatal_neuron_in_an_interneuron_cage.jpg"

)

]Preview the images directly from the web¶

Image.from_url((WebImage & 'image_number=0').fetch1('image_url'))Image.from_url((WebImage & 'image_number=1').fetch1('image_url'))Define a table with attachments¶

Now we can use the stores to define attachment attributes in the form attribute_name : attach@store # comment where the store is either @local or @shared as defined above.

Let’s define the table OriginalFile to automatically download and attach files from WebImage and stores the attachments in the shared store.

@schema

class OriginalFile(dj.Imported):

definition = """

-> WebImage

---

image_file : attach@shared

"""

def make(self, key):

# get the URL

url = (WebImage & key).fetch1('image_url')

# download the file from the web

local_file = os.path.join(os.path.abspath('.'), url.split('/')[-1])

with open(local_file, 'wb') as f:

f.write(requests.get(url).content)

# attach the file

self.insert1(dict(key, image_file=local_file))

# delete the downloaded file

os.remove(local_file)dj.Diagram(schema)# perform the download

OriginalFile.populate()OriginalFile()# preview downloaded attachment

file = (OriginalFile & 'image_number=1').fetch1('image_file')

Image.from_file(file)os.remove(file)Extract images into blobs¶

Now let’s define another class that extracts imags from attached files and stores as blobs in the local store.

# Declare a table with a configurable blob

@schema

class Slide(dj.Computed):

definition = """

-> OriginalFile

---

image_array : blob@local # array in specified store

"""

def make(self, key):

# get the attached file

file = (OriginalFile & key).fetch1('image_file')

# save image data

self.insert1(dict(key, image_array=imageio.imread(file)))

# remove the downloaded file

os.remove(file)Slide.populate()Slide()schema.externalExternal file tables for schema `test_attach`:

"shared" s3:"

"local" file:/home/dimitri/dev/db-programming-with-datajoint/notebooks/dj-store"schema.external['local']# Plot image form a blob

plt.imshow((Slide & 'image_number=0').fetch1('image_array'));

Caching¶

By default, the data from blobs and attachments are retrieved from remote stores with every fetch command. For repeated queries, a cache folder may be specified to improve performance and reduce cost of operations. After the first fetch of a given blob or attachment, it will be read from the cache.

# configure the cache

dj.config['cache'] = './dj-cache'# clear the cache for the timing test

import shutil

if os.path.isdir(dj.config['cache']):

shutil.rmtree(dj.config['cache'])%%timeit -n1 -r1

# first time no cache

files = OriginalFile.fetch('image_file')17.7 ms ± 0 ns per loop (mean ± std. dev. of 1 run, 1 loop each)

%%timeit -n1 -r1

# now with cache

files = OriginalFile.fetch('image_file')11.4 ms ± 0 ns per loop (mean ± std. dev. of 1 run, 1 loop each)

Deleting¶

Deleting from tables using external storage is just as simple and transaction-safe as with all other kinds of attributes. Simply use the delete method:

schema.externalExternal file tables for schema `test_attach`:

"shared" s3:"

"local" file:/home/dimitri/dev/db-programming-with-datajoint/notebooks/dj-store"schema.external['shared']schema.external['shared'].unused()(WebImage & 'image_number=0').delete()About to delete:

`test_attach`.`__slide`: 1 items

`test_attach`.`_original_file`: 1 items

`test_attach`.`#web_image`: 1 items

Proceed? [yes, No]: yes

Committed.

schema.external['shared'].unused()Deleting¶

For the sake of performance, deleting from the data tables does not remove the data from external storage.

The delete method of the external table deletes its unused entries and their corresponding external files.

schema.externalExternal file tables for schema `test_attach`:

"shared" s3:"

"local" file:/home/dimitri/dev/db-programming-with-datajoint/notebooks/dj-store"You may cleanup the external table using its delete method. It is a transaction-safe operation and can be performed at any time.

schema.external['local'].delete()100%|██████████| 1/1 [00:00<00:00, 47.74it/s]

[]schema.external['shared'].delete()100%|██████████| 1/1 [00:00<00:00, 66.55it/s]

[]for s in schema.external.values():

s.delete()0it [00:00, ?it/s]

0it [00:00, ?it/s]

schema.external['shared'].used()schema.external['shared'].unused()